Composition Forum 20, Summer 2009

http://compositionforum.com/issue/20/

Self-Assessment As Programmatic Center: The First Year Writing Program and Its Assessment at California State University, Fresno

Abstract: This profile presents an overview of CSU, Fresno’s writing program and its program assessment endeavors. It argues that one way to achieve effective program assessment and a complementary writing program is to engender a culture of self-assessment at all levels.

Reading the world always precedes reading the word, and reading the word implies continually reading the world . . . however, we can go further and say that reading the word is not preceded merely by reading the world, but by a certain form of writing it or rewriting it, that is, of transforming it by means of conscious, practical work . . . To sum up, reading always involves critical perception, interpretation, and rewriting of what is read. (Freire and Macedo 35-36)

Freire’s famous rendition of the process of literacy sums up the central philosophy and pedagogy of the First Year Writing (FYW) program, which is also a directed self-placement (DSP) program, and its assessment at California State University, Fresno. In fact, Freire defines literacy as a reading and assessment practice, and both practices are at the heart of the writing program’s curriculum. We are currently finishing our third year (AY 2008-09) of a five-year pilot. My good colleagues (Rick Hansen, Virginia Crisco, and Bo Wang), who designed and proposed our pilot before I was hired, formulated much of the philosophy, structure, and curricula; however, nothing had yet been done to assess the program, which was one of the reasons they hired me. Outcomes had been developed, but there were too many to measure, and they weren’t closely associated with any of the courses students took in the program. Additionally, we had not yet developed a formal mission statement or goals that could support the outcomes we were promoting. So in the process of designing and implementing the program assessment in my first year (AY 2007-08), we developed these articulations as well. Much like our students, we as a writing program are continually coming to know ourselves, continually becoming “literate” about our teaching, our curriculum, and our students’ learning.

The focus of this profile, then, is this: The important shaping force of our FYW program and its program assessment is the concept of self-assessment itself as integral to the process of literacy, much like Freire’s account of reading the world and word. Freire’s articulation emphasizes two characteristics that define our program and students’ learning: (1) reading and writing are joined practices; and (2) self-assessment practices (“interpretation” and “rewriting” in Freire’s conception) are equally important to reading-writing processes.

So self-assessment is the center of our writing program’s curriculum. It is why we chose a program portfolio as the key artifact for making important pedagogical decisions and programmatic measurements. Self-assessment is key to how we teach reading and writing practices, and it is the gateway into our program: DSP demands, of course, that students self-assess. Once students are in the program, the portfolio asks them to continue to self-assess their reading and writing practices in order to understand and measure their own progress, and we give them programmatic opportunities to assess others. Assessing others’ work is framed as a reading practice that is important to learning how to write better and to write in community, and to understanding practice as not merely pragmatic but also reflective and theoretical. Additionally, since we’re a pilot, and DSP is a mostly untested assessment technology, self-assessment is also the way we approach the ongoing maintenance, administration, and inquiry into the program and the validity of its course placements.

I have a larger argument in this profile, however. Centering writing programs on the concept of self-assessment as a defining aspect of the processes of literacy helps create an important culture of praxis, a culture of self-assessment, in the classroom and writing program.{1} If we (our students and writing programs themselves) are always becoming literate, always coming to understand our own practices, then we are always in the process of self-assessment, making the two processes, literacy and self-assessment, one and the same practice. As in our program, a centering on self-assessment asks that teachers and students turn their energies and time not to perfecting products but to articulating reflexive, effective, and flexible reading and writing practices, rhetorical activities that are also self-assessment activities.

I should briefly distinguish three elements in our program that may be confused in my discussion. The program portfolio that we use at CSUF is not synonymous with program assessment, nor is DSP synonymous with program assessment. Each of these elements (program assessment, portfolio, and DSP) is separate, but they work together, always serving multiple purposes. Our program assessment may best be characterized as a large set of inquiries and data gathering, while the portfolio is a pedagogical element in classrooms that we happen to use to collect data in order to make program assessments. Meanwhile, the DSP program is ONLY our course placement technology, but it’s one that purposefully sets up the curriculum of self-assessment, epitomized in the portfolio. DSP is an “assessment,” a self-assessment in fact, but it’s only the way in which students get into their writing course(s); the portfolio drives the pedagogy, and the program uses the portfolio as a convenient way to capture student learning. Thus, we feel, the best way to find out whether the DSP is working properly and making appropriate placements (a validation inquiry, which is a kind of program assessment) is to investigate student learning in courses; as a result, most of our direct evidence of student learning comes from the portfolio.

The FYW Program

The program’s conception of literacy and its philosophy, mission statement, program goals, and learning outcomes will make clear why we measure what we do and why we measure in the ways we do, i.e., why program assessment and self-assessment are central and defining of our writing program.

Our FYW program is relatively large. In AY 2007-08, we enrolled 1,762 students in Engl 5A and 844 in Engl 10.{2} Historically, many of CSUF’s students have lacked confidence in their reading and writing abilities, as many first year students do. Our students, however, come with a variety of home languages, from schools that often do not ask them to read or write much or write in the ways we ask of them, from working-class backgrounds, and as first-generation college students. As many of our long-standing writing teachers have attested, our students need particular kinds of reading and writing practices in the right kind of environment. The DSP and the FYW program’s philosophy were designed to respond to at least three important historical issues shaping the FYW program in the past:

- the need to reduce the program’s reliance on an outside, standardized placement test (e.g., the EPT, SAT, etc.), because such tests are not valid enough for our writing placement purposes, and because, in spite of our students’ scores, the vast majority successfully complete their writing courses when given the right educational atmosphere, pedagogies, curriculum, and responsibilities;{3}

- the need that students have to place themselves and gain agency and responsibility over their educational paths in the university; that is, research shows that when students feel responsible for their own choices, when they’ve chosen their classes, they tend to be more invested in them, and succeed in higher numbers;{4}

- the need to give students credit for all of the writing courses they take since university credit acknowledges their work, does not penalize students for wanting extra practice in writing, and reduces the institutional and social stigma of “remedial” writing courses.{5}

These historical exigencies helped us rethink our assumptions about literacy and its teaching in the program. Our FYW program promotes a philosophy that understands literacy as social practices sanctioned by communities (Volosinov 13); thus, learning “academic literacy” (however one wishes to define the concept) effectively will demand social processes that call attention to the way the local academic communities and disciplines value particular rhetorical practices and behaviors when reading and writing. The “academic literacy” we promote in our program, then, is a self-conscious process of discursive practices and strategies that acknowledge their roles in societal power structures, very akin to James Paul Gee’s conception of “powerful literacy” (Gee 542). Gee calls these power structures “dominant” or “secondary” discourses, acquired typically in institutions, such as schools (Gee 527-28). However, our program’s concept of literacy is also informed by Lisa Delpit’s important critique of Gee. She argues that students of color and other groups who come to our classrooms from discourse communities distant from the academic discourse taught are not socially predetermined to never quite “get in” to the club (Delpit 546). Delpit discusses several examples that disprove Gee’s claim, such as Bill Trent, a professor and researcher who came from a poor, inner-city household in Richmond, Virginia, and whose mother had a third grade education and father had an eighth grade education (548).
Additionally, Delpit argues against Gee’s claim that someone “born into one discourse with one set of values may experience major conflicts when attempting to acquire another discourse with another set of values” (546-47), a claim that often leads teachers not to teach dominant literacies, in our case, the conventional academic literacy found in texts like Graff and Birkenstein’s They Say / I Say textbook.{6} Delpit’s criticism of Gee is important to our program’s concept of academic literacy because CSU, Fresno is a member of the Hispanic Association of Colleges and Universities (HACU), with large populations of Latino/a and Asian Pacific Islanders who come into our classes from discourse communities distant from the one we promote.{7}

Thus the program consciously attempts to acknowledge three things that Delpit highlights in her critique: one, that what we offer our students are academic literacy practices that are connected to dominant discourses and economic power, which are associated with White, middle-class academic English; two, that all who enter have equal claim to these literacy practices, and can learn them; and three, that subordinate discourses (those not taught in the expressed, formal curriculum) are not just a part of the classroom but may transform the curriculum, literacy practices, and the discourse we promote (Delpit 552). This is idealistic, yes, but it’s crucial to the articulation of our program not just because of who enters and resides in our classrooms, but because, as Stanley Aronowitz and Henry Giroux have argued, dominant literacy is “a technology of social control” that “reproduces” social and economic arrangements in society (50). We do not want our assessments of those discourses to blindly reproduce discourses and social arrangements that may be inequitable and unfair to some while privileging others.

Our classrooms are filled primarily with working-class students of color, many of whom are first-generation college students. Since we do not wish to simply reproduce hegemony by promoting a dominant academic literacy, the process of academic literacy begins with course placement, constructing an environment for engaging students reflectively with the ways power moves through discourses that “legitimat[e] a particular view of the world, and privilege, a specific rendering of knowledge” (Aronowitz and Giroux 51). This critical notion of literacy as reflexive practices that are both hegemonic and counter-hegemonic means our DSP program is vital to the curriculum and our students’ learning. It introduces them, through self-assessment of their own reading and writing practices, to their learning paths in our program and begins to orient them to the new role they must play, one more active, more participatory, and one with more power than they may be used to. From this understanding of literacy, four elements make up the writing program’s philosophy, which centers on literacy, reading, and writing practices:

- Literacy learning is social.

- Reading and writing are connected processes.

- Reading and writing are academic practices of inquiry and meaning-making.

- Reading and writing practices are shaped by and change based on the academic discipline.

The central activities in the program’s philosophy are reflection and self-assessment of reading and writing practices, which are crucial to developing one’s literacy practices. To be a good reader and writer means ultimately that a writer must be able to see her writing as a product of a field of discourse(s), which means she can assess its strengths, weaknesses, choices, and potential effects on audiences for particular purposes in particular rhetorical situations. It also means she can situate her practices in relation to a community of other readers and writers engaging in similar activities, which mirrors Graff and Birkenstein’s “conversation” metaphor used to describe academic literacy. This additionally means that all students must practice assessment constantly: their literacy processes are not merely punctuated with self-assessment and reflection but are interlaced with these practices. In a nutshell, good writers are always good self-assessors of their practices. At all levels in our program, (self) assessment is fundamental to the way agents, and the program itself, make decisions, move through the program, understand literacy, and situate, understand, and define themselves in courses through the portfolio.

You can hear this central theme in the program’s mission statement:

The FYW program is committed to helping all students enter, understand, and develop literacy practices and behaviors that will allow them to be successful in their future educational and civic lives. In short, our mission is to produce critical and self-reflective students who understand themselves as reader-writer-citizens.

More specifically, through instruction, community involvement, and a wide variety of other related activities, the FYW program’s mission is to:

- teach and encourage dialogue among diverse students (and the university community) about productive and effective academic reading and writing practices (i.e., academic literacy practices), which include on-going self-assessment processes of students;

- assess itself programmatically in order to understand from empirical evidence the learning and teaching happening in the program, measure how well we are meeting our program and course outcomes, aid our teachers in professional development, and make changes or improvements in our methods, practices, or philosophy.

Our mission is not just to help students learn academic literacy practices. Our mission is also to learn about our program, its students, and our practices. So as a program, we take to heart the practice of self-assessment. It’s central to the way literacy is theorized, taught, and practiced in the classroom, and it’s central to the way we approach ourselves as an evolving program also learning about itself.

Finally, there are three primary learning goals for the entire program and eight learning outcomes. During the pilot, we measure only the five central outcomes, articulated as follows:{8}

- READING/WRITING STRATEGIES: Demonstrate or articulate an understanding of reading strategies and assumptions that guide effective reading, and how to read actively, purposefully, and rhetorically;

- REFLECTION: Make meaningful generalizations/reflections about reading and writing practices and processes;

- SUMMARY/CONVERSATION: Demonstrate summarizing purposefully, integrate “they say” into writing effectively or self-consciously, appropriately incorporate quotes into writing (punctuation, attributions, relevance), and discuss and use texts as “conversations” (writing, then, demonstrates entering a conversation);

- RHETORICALITY: Articulate or demonstrate an awareness of the rhetorical features of texts, such as purpose, audience, context, rhetorical appeals, and elements, and write rhetorically, discussing similar features in texts;

- LANGUAGE COHERENCE: Have developed, unified, and coherent paragraphs and sentences that have clarity and some variety.

The Structure of the Program

The FYW program consists of three courses: English 5A, English 5B, and English 10. Students take either Engl 5A-5B or Engl 10 to fulfill the university writing requirement. With the addition of Ling 6 (discussed below), which is taught in another department, these courses make up three course paths. Students place themselves into FYW through a directed self-placement (DSP) model.{9} According to Daniel Royer and Roger Gilles, the first to design, administer, assess, and publish results on directed self-placement (“Directed Self-Placement”), DSP “can be any placement method that both offers students information and advice about their placement options (that’s the ‘directed’ part) and places the ultimate placement decision in the students’ hands (that’s the ‘self-placement’ part)” (Royer and Gilles, “Directed Self-Placement” 2). In our model, students make the placement decision based on two kinds of information that we provide: one, a set of course outcomes, and two, prompts that ask them to consider their own reading and writing histories and behaviors. Students place themselves into one of three options:

- Option 1: English 10, Accelerated Academic Literacy. This is an advanced class, and students who choose this option typically are very competent readers and writers, ready to read complex essays, develop research supported analyses, and complete assignments at a faster pace. Generally, these students have done a lot of reading and writing in high school and feel comfortable with rules of spelling, punctuation, and grammar. This course starts with longer assignments (5-7 pages) and builds on students’ abilities to inquire, reflect, compose, revise, and edit.

- Option 2: English 5A/5B, Academic Literacy I & II. These courses “stretch” the reading and writing assignments over two semesters and have the same learning outcomes as English 10. Students in this option often do not do a lot of reading and writing (in school or outside of it) or may find reading and writing difficult. Students get to be with the same teacher for both courses (a full year). The first semester (Engl 5A) starts with shorter assignments, focusing on reading practices, and moves toward more complex reading and writing at semester’s end. The second semester (Engl 5B) builds on work in 5A and leads students through longer and more complex reading and writing tasks. Finally, these courses focus more on researching, citing, and including sources correctly in writing, with more direct instruction in language choice, sentence variety, and editing.

- Option 3: Linguistics 6, Advanced English Strategies for Multilingual Speakers, then English 5A & 5B. The first class (Ling 6) assists multilingual students with paraphrasing and summarizing while also providing help with English grammar. Students who take this course usually need instruction that addresses the challenges second language learners face with academic reading, writing, grammar, and vocabulary. Students in this option tend to use more than one language, avoid reading and/or writing in English, and/or have a hard time understanding the main points of paragraphs or sections of a text. This course, Ling 6, focuses on increasing reading comprehension while developing a broader English vocabulary. This class builds language skills through short readings and writing assignments that prepare students for English 5A and 5B.

In AY 2007-08, the majority of our students selected option 2, the English 5A-5B sequence (the stretch program). Of the 2,606 enrollments in the year, 1,762 (68%) enrolled in Engl 5A, while 844 (32%) enrolled in Engl 10. Only a handful of students each year choose option 3, usually after enrolling in option 2. Because Ling 6 is very small, is not taught in our program or by our teachers, and is not managed by our FYW program, we gather little data on it, and I will not discuss it further here.

Program Portfolio As Pedagogy and Program Assessment Device

The central device we use to guide the program’s pedagogies and to directly assess student learning along program outcomes is the program portfolio. Portfolios have been shown in many places to emphasize student agency, control, selection, development along dimensions/outcomes, and reflection/self-assessment (Hamp-Lyons and Condon; Elbow and Belanoff), each of which is important to our program.{10} Drawing on Sylvia Scribner’s “literacy metaphors,” Belanoff argues that portfolio pedagogies can provide a richer environment for literacy practices. They can account for “literacy as adaptation” to a dominant discourse, “literacy as power” through practicing dominant forms, and “literacy as grace” by participating socially in human meaning-making activities (13), each of which is an important practice for our program.

Because the central component of the assessment technology that seeks to understand student learning in our program is the program portfolio and the activities and agents that surround it, the portfolio is required of all students in all three courses and has a set of common requirements dictated by the curriculum. All courses require common sets of assignments (projects) by course, which are used to fill the program portfolio. While documents, assignments, and prompts may vary in terms of readings and details, each project must center on a specified set of outcomes (listed above in the previous section). In fact, we provide all teachers with “template” assignment sheets that articulate the main features and outcomes of each project, which allows the teacher to modify and adjust invention and warm-up assignments.{11}

At midterm and final, a sampling of portfolios is gathered by the program as direct evidence of student learning. The evidence from portfolios is three-fold:

- Independent Ratings: Over each summer, we rate portfolios along the five outcomes listed previously (i.e., reading/writing strategies, reflection, summary/conversation, rhetoricality, and language coherence). These blind ratings are done by teachers in the program, but not the teacher of record.

- Teacher Ratings (Competency Measures): We capture teachers’ ratings (in the semester) from these same portfolios by the teachers of record. I call these “competency measures” because the idea is to gather a more contextually sensitive rating of the portfolios and writing competence in the course—a contextual judgment. Since teachers know the students well, how others are performing in the class, and have a sense of where each portfolio as a presentation of learning fits into their particular class, these ratings, I argue, are competency measures and may be different from the independent ratings.

- Student-Peer Ratings: Additionally, since self-assessment, student agency and control, and critical understanding of literacy practices are important to the philosophy, mission, goals, and outcomes of the program, all students engage in peer portfolio assessments at midterm and final, which ask them to do two things: (1) read and write descriptive assessments along each program outcome that begin with evidence building from the reading of peer portfolios and end by making descriptive judgments along each outcome; and (2) rate each portfolio along each program outcome with the teacher in class after the descriptive assessments are finished.

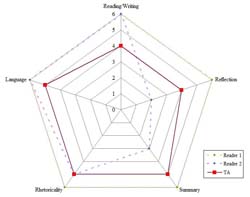

Figure 1: Example radar graph used to discuss difference in portfolio ratings with students.

The program uses all three measures from portfolios to get a rich sense of how well students are learning from three important stakeholders: outside teachers who teach in the program, the teacher of record, and the students themselves. This year is the first year we’ve tried the student-peer ratings, so we are looking forward to seeing what they tell us about the other ratings gathered. What we hope to find is that students’ ratings come close to those of their teachers and/or outside teachers, which would suggest that they are able to reflect upon and self-assess their own writing practices. If students’ ratings diverge noticeably from teachers’ ratings, this provides important pedagogical information for building curricula and lessons, rethinking our own judgments, etc. Additionally, folding this third layer of evidence into our program assessment also builds the construct and content validity (and fairness) of the portfolio by allowing students to become agents in the assessment of their own learning through practicing assessment as a Freirian reading practice. Teachers also use student ratings in a variety of ways in their classes to discuss the midterm portfolio assessments, program outcomes, portfolio documents, and self-assessment as a practice, among other things. For instance, one teacher, Matthew Lance (a graduate student), recently shared with other teachers his practice of using Excel to radar-graph the portfolio ratings given by students and the teacher, as a tool to discuss disagreement and variance among multiple readings of portfolios in follow-up student conferences. Each reader’s ratings form a pentagon whose size depends on that reader’s ratings (see Figure 1). Larger pentagons show higher ratings, while misshapen ones suggest the reader sees weakness in one or more areas and strength in others. All three pentagons are overlaid on one graph to show differences among readers along outcomes.
The radar graphing simplifies the judgments, so it is mostly useful for starting and focusing discussions about the descriptive assessments of each reader. It points out variance and the degree of variance in a simple but provocative way, a way that students and teacher can use to begin conversations about ratings, self-assessments, and outcomes.

Assessment Research Methods

Ultimately, the program assessment that I designed is robust, mainly because I wanted to make sure that we had plenty of data, from multiple sources, to argue for the effectiveness of our pilot DSP, the validity of its course placements, and the appropriateness of decisions to pass students through the program. I also wanted to understand the learning occurring in the new writing curriculum that we instituted at the same time as the pilot DSP. In a nutshell, the program gathers the following information each semester from a sample of students (approximately 25% of the total students enrolled in our program):

- Entry and exit course surveys that ask about satisfaction and course placement accuracy;

- Independent portfolio ratings, conducted each summer by teachers teaching in the program (discussed above);

- Portfolio competency measures that take ratings from the teacher of record (discussed above);

- Student-Peer portfolio ratings that gather ratings from students, allow them to exercise assessment practices purposefully, and provide voice in the program assessment (discussed above);

- Student progress measures in courses that gather basic information at midterm and final about each student’s general progress in the course, identifying simply “passing,” “not passing,” or “borderline passing”;

- Course grade distributions in all FYW courses that provide an indirect measure of student learning, as well as a way to see how the portfolio and our curriculum are affecting students’ chances for continuing education (persistence/retention in the university) and their educational paths in the university (degree of success);

- Passing rates in all FYW courses, which are a function of grade distributions;

- Frequent ongoing projects/measures of various kinds that look at particular aspects of the program that beg for inquiry; these are often short-term data-gathering projects lasting a semester or a year.

All of the evidence above, which we gather and I consider when assessing the program and DSP decisions, is first separated by DSP option (i.e., Engl 5A-5B and Engl 10) and then, in most cases, analyzed along three lines: race, gender, and generation of student (i.e., first generation or continuing generation).

The final category, ongoing special projects in the program, has varied over the last year or so. In the Spring 2008 semester, for instance, a group of graduate students (most of whom were TAs) and I gathered teacher commenting data on student midterm portfolio drafts to measure empirically the kinds, quality, quantity, and frequency of teachers’ comments on drafts by students of different racial identifications. This began in my Composition Theory seminar and culminated in a CCCC roundtable discussion in San Francisco in 2009. Since then, it has spawned three thesis projects, which will also be folded into our future program assessments. The first of these follow-up projects is an empirical look at the rhetoric of reflection in student portfolios in our program. The second is a closer look at the construction of error in teacher commenting practices on Latino/a writing in the program.{12} The third project is an experiment that attempts to test a hypothesis induced from the findings of the initial commenting project: that the race of students seems to affect the commenting practices of teachers. Additionally, this year, I’ve begun gathering simple survey data and grade distributions from students in the program who are in classes that use grading contracts, something I use myself and introduced to teachers about a year and a half ago. Our grading contracts guarantee each student a “B” grade in the course as long as she meets certain criteria (negotiated with the entire class). These criteria are based on doing the work in the spirit in which it is asked, regardless of quality, which allows teachers and students to deemphasize grades and focus on more descriptive and formative assessment practices in the classroom, giving students more agency and control. About 25 teachers in our program use grading contracts each semester, so contracts are a significant factor shaping the learning of our students.{13}

I detail these special projects because they highlight one very important aspect of our FYW program: Self-assessment as program culture. Self-assessment is not just a student learning outcome, but it’s half of our program’s mission statement (listed above). This promotes assessment as a set of teacher and student practices that are pedagogical and help us understand ourselves and our growth. It also makes for a robust, dynamic, and pedagogically sound curriculum because it develops a rich corps of teachers who know well the learning in the program and are continually reflecting upon their practices and those of the program. Similar insights about using assessment as a way to build culture in Washington State University’s writing programs have been discussed in Beyond Outcomes (Haswell 2001).

Our findings are not easy to summarize, so the summary below is by no means complete, but it gives a general idea of what we learned in the first full year of our program assessment efforts (AY 2007-08).{14}

Assessment Research Findings

In its most basic form, the question our program assessment asks is: “What are our students learning in our program?” or “How well are they learning along our program outcomes?” We also ask: “How appropriate are the DSP placements?” Overall, it appears our students are developing along all of the outcomes we measure in both options 1 (Engl 10) and 2 (Engl 5A/5B), meet our expectations in high numbers in their final portfolios, and are highly satisfied with their DSPs. Additionally, students pass at acceptable rates, persist in the university after our courses, but there is some room for improvement.

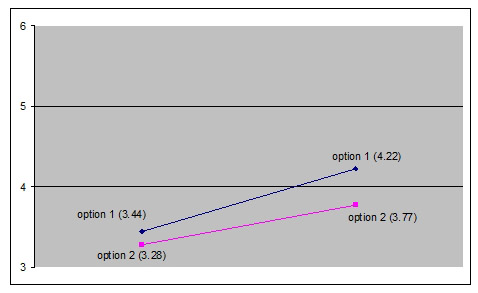

First, the direct evidence from independent portfolio ratings suggests that students are learning along all outcomes. In option 1 (Engl 10) portfolios,

- all students achieved an overall rating of "adequate quality" or better (100%) in final portfolios.{15}

- on average, portfolios improved by .68 points (on a 6-point scale) across the five outcomes measured. The most improvement between midterm and final portfolios occurred in "Reflection" (.94 points), and the second-most occurred in "Summary/Conversation" (.89).

In option 2 (Engl 5A/5B) portfolios,

- the average student met all outcomes, and 95.3% of all students achieved an overall rating of "adequate quality" (rating of 3) or better in final portfolios.

- only 4.7% of all students' final portfolios were rated "poor quality" (1 or 2), a 25.3% decrease from the 5A midterm.

- on average, students improved by .52 points across the five outcomes measured, and the average student score rested in the "proficient" category for each outcome. The most improvement came in "Rhetoricality" (.59), and the second-most came in "Summary/Conversation" (.55).

Figure 2: Average Overall Ratings for Option 1 and 2 Student Portfolios in Independent Ratings Increase During Their Time in The Program.

So based on both the resting points for final portfolio ratings and the development students made over the course of their studies, our students, who place themselves in their courses in both options, appear to learn along all dimensions and meet the program expectations in high numbers (see Fig. 2).

Second, the portfolio competency measures (direct evidence of learning) that we gather directly from teachers in the semester suggest similar conclusions. Option 1 portfolios yielded the following results:

- Competency measures for all students showed a very modest improvement of 3.2 percentage points, from 83.2% at midpoint to 86.4% at final. This finding appears NOT to agree closely with the numerical portfolio ratings (100%) mentioned above.

- Interestingly, females in option 1 attained higher measures overall, with 85.1% judged competent at midterm and 89.2% at final. This is 7.6 percentage points higher than males.

- Black females (and perhaps all Blacks) appear most at risk in our program, achieving overall competency in the fewest numbers at the Engl 10 final (72.73%), while Latinos start with higher competency (83.3%) but end at a rate similar to that of Black females (72.2%).

Option 2 portfolio results were as follows:

- Students showed continual overall improvement (22.7 percentage points), from 73.9% at 5A midterm to 96.6% at 5B final.

- Their overall competency measures (96.6% at final) appear to agree closely with the numerical portfolio ratings of the same portfolios (95.3%).

- Unlike in option 1, gender DID NOT play a significant role in competency measures, with males receiving only 0.5 percentage points fewer overall competent judgments than females at the 5B final.

- Black males, however, are most at risk in option 2: Black males achieved overall competency in the fewest numbers at 5B final (88.89%) and received 7.11 percentage points fewer overall competent judgments than the next closest group (Latinos).

- Latinos (and Latinas to a lesser degree) may be at risk as well, starting with lower competency measures (60%) but ending at a level comparable to most other groups (96%).

| Race | Option 1: Engl 10 Midterm | Option 1: Engl 10 Final | Option 2: Engl 5A Midterm | Option 2: Engl 5B Final |
|---|---|---|---|---|
| Asian/Pacific Islander (API) | 84.85% | 93.94% | 68.89% | 97.78% |
| Black | 56.25% | 81.25% | 86.67% | 93.33% |
| Latino/a | 88.89% | 83.33% | 69.77% | 96.51% |
| White | 94.29% | 85.71% | 77.27% | 96.59% |

Table 1. Overall Proficiency Ratings on Portfolios by Option and by Race.

Overall competency rates (ratings generated by teachers of record from portfolios) are more evenly distributed at final in option 2, suggesting that the stretch program creates a racial leveling effect in terms of the competency read in portfolios. Meanwhile, Blacks and Latinos/as have the lowest final competency rates in option 1 (81.25% and 83.33%, respectively; see Table 1).
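One rough way to check the "leveling" reading of Table 1 is to compare, for each option, the gap between the highest and lowest racial group's final competency rate. The measure used here (highest minus lowest rate, in percentage points) is an illustrative summary chosen for this sketch, not a statistic the program assessment itself reports. The function names are hypothetical.

```python
# Final-portfolio competency rates (%) by racial group, copied from Table 1.
final_competency: dict[str, dict[str, float]] = {
    "option 1 (Engl 10 final)": {"API": 93.94, "Black": 81.25, "Latino/a": 83.33, "White": 85.71},
    "option 2 (Engl 5B final)": {"API": 97.78, "Black": 93.33, "Latino/a": 96.51, "White": 96.59},
}


def spread(rates: dict[str, float]) -> float:
    """Gap, in percentage points, between the highest and lowest group rate."""
    return round(max(rates.values()) - min(rates.values()), 2)


def lowest_group(rates: dict[str, float]) -> str:
    """Name of the group with the lowest rate."""
    return min(rates, key=lambda group: rates[group])


for option, rates in final_competency.items():
    print(f"{option}: spread {spread(rates):.2f} points, lowest group {lowest_group(rates)}")
```

On these figures the gap is about 12.7 points in option 1 and about 4.5 points in option 2, with Black students lowest in both. A range ignores group sizes, so it is only a first look, not a test of significance.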

Third, our student survey results (indirect evidence of learning) suggest that, generally speaking, students are highly satisfied with their chosen writing courses, which makes for more active and engaged learners. In option 1,

- students grew in satisfaction, from 91.2% at midpoint to 96% at final.

- the vast majority of females (94.6%) started satisfied and slightly more of them ended satisfied (97.3%).

- Whites (97.2%) and APIs (97%) ended up the most satisfied with their DSP choice of all racial groups.

- as perhaps expected, Black males ended up being the least satisfied (80%) racial group.

In option 2,

- students grew in satisfaction with their DSPs during the course of their studies, from 83.9% at 5A midterm to 87.7% at 5B final.

- males achieved higher levels of satisfaction (93.8%) than females (84.5%) by 5B final.

- females' satisfaction with their DSPs was the same at 5B final as at 5A midterm (84.5%).

- interestingly, 91.1% of all students of color felt mostly or completely satisfied in their DSPs in option 2, with APIs being the most satisfied group (97.8%).

- White females, however, had the lowest satisfaction at most points in the year (5A midpoint: 82.5%; 5B final: 75.4%).

If student satisfaction is linked to learning and deeper engagement, as Reynolds suggests, then our survey results indicate that our program is cultivating high levels of learning and engagement. A few groups, particularly Blacks, need additional attention.

Fourth, passing rates (indirect evidence) suggest that students are learning adequately along the outcomes we promote and are choosing their courses appropriately in our DSP. For option 1,

- 5.1% fewer students passed compared to the previous writing course (Engl 1A) in 2005, a course students were placed into by standardized test scores.

- 6.2 percentage points fewer APIs (72.5%), 13.9 fewer Blacks (69%), and 9.5 fewer males (70.4%) passed than in the previous writing course (Engl 1A).

- regardless of racial group (except for APIs), passing rates appeared to be consistently lower than overall competency measures, by an average of 10 percentage points. If competency is considered a criterion validation measure, this means grades seemed to represent students' competencies consistently regardless of race or gender.

Option 1 appears to be more difficult to pass than the previous one-semester writing course. For option 2,

- roughly the same percentage of students passed overall (82.4%) as in the previous writing course (Engl 1A) in 2005.

- males and females passed at rates similar to those in the old Engl 1A (81.1% for males and 83.3% for females, compared with 79.9% and 83.5%).

- 4.5 percentage points more APIs (83.2%) passed than in the earlier writing course.

- 9.2 percentage points fewer Blacks (73.7%) passed than in the earlier writing course.

- 13.1 percentage points fewer Native Americans (78.6%) passed than in the earlier writing course.

- regardless of group (except for Blacks), passing rates appeared to be consistently lower than overall competency measures, by an average of 10 percentage points. This means grades seemed to represent students' competencies consistently regardless of race or gender in option 2.

Similar to option 1, option 2 is more challenging than the previous one-semester course. Our passing rates suggest internal consistency in both options. They also suggest that option 2 passes just as many students as the old Engl 1A course, while option 1 fails a few more students. Option 2 also appears to reduce negative racial and gender formations in passing rates more effectively, which agrees with the direct evidence in portfolios mentioned above. While Blacks fail more frequently in both options, they do end highly satisfied with their DSP (see Table 2 for a comparison of passing rates and satisfaction rates by race and option).

| Group | Option 1: Engl 10 Passing | Option 1: Engl 10 Entry Satisfaction | Option 1: Engl 10 Exit Satisfaction | Option 2: Engl 5A Passing | Option 2: Engl 5A Entry Satisfaction | Option 2: Engl 5A Exit Satisfaction | Option 2: Engl 5B Passing | Option 2: Engl 5B Entry Satisfaction | Option 2: Engl 5B Exit Satisfaction | Engl 1A Passing |
|---|---|---|---|---|---|---|---|---|---|---|
| All | 76.9% | 91.2% | 96.0% | 82.7% | 83.9% | 89.8% | 82.4% | 82.2% | 87.7% | 79.6% |
| APA | 72.5% | 97.0% | 97.0% | 86.4% | 84.4% | 91.1% | 83.2% | 86.7% | 97.8% | 78.7% |
| Black | 69.0% | 87.5% | 93.8% | 75.6% | 86.7% | 93.3% | 73.7% | 86.7% | 93.3% | 82.9% |
| Latino/a | 78.0% | 86.1% | 94.4% | 76.9% | 81.2% | 92.9% | 80.9% | 83.5% | 89.4% | 78.1% |
| White | 82.6% | 91.7% | 97.2% | 86.2% | 85.1% | 85.1% | 83.7% | 77.0% | 80.5% | 81.4% |
| CGS | no data | 90.2% | 97.6% | no data | 84.0% | 85.3% | no data | 80.0% | 85.3% | no data |
| FGS | no data | 91.6% | 95.2% | no data | 83.4% | 91.7% | no data | 82.8% | 88.5% | no data |
| All females | 80.5% | 94.6% | 97.3% | 84.9% | 84.5% | 87.1% | 83.3% | 80.0% | 84.5% | 83.5% |
| APA female | no data | 100.0% | 100.0% | no data | 90.0% | 90.0% | no data | 83.3% | 96.7% | no data |
| Black female | no data | 90.9% | 100.0% | no data | 83.3% | 100.0% | no data | 83.3% | 83.3% | no data |
| Latina | no data | 83.3% | 94.4% | no data | 83.3% | 90.0% | no data | 83.3% | 88.3% | no data |
| White female | no data | 100.0% | 95.7% | no data | 82.5% | 80.7% | no data | 73.7% | 75.4% | no data |
| All males | 70.4% | 88.0% | 94.0% | 79.4% | 82.7% | 95.1% | 81.1% | 86.4% | 93.8% | 79.9% |
| APA male | no data | 92.9% | 92.9% | no data | 73.3% | 93.3% | no data | 93.3% | 100.0% | no data |
| Black male | no data | 80.0% | 80.0% | no data | 88.9% | 88.9% | no data | 88.9% | 100.0% | no data |
| Latino | no data | 88.9% | 94.4% | no data | 76.0% | 100.0% | no data | 84.0% | 92.0% | no data |
| White male | no data | 83.3% | 100.0% | no data | 90.0% | 93.3% | no data | 83.3% | 90.0% | no data |

Table 2. Comparison of passing rates and satisfaction levels in options 1 and 2 and Engl 1A. Abbreviations: APA=Asian Pacific-American; CGS=Continuing Generation Student; FGS=First Generation Student.
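The group comparisons with Engl 1A in the passing-rate findings are differences between two rates, that is, gaps in percentage points rather than relative percentage changes. For example, the Black option 1 figure is 69.0 − 82.9 = −13.9 points. Measured relative to the old rate, the same drop would be about 16.8%. The minimal sketch below recomputes those gaps from Table 2. The dictionary layout and function name are hypothetical, chosen only for illustration.

```python
# Passing rates (%) from Table 2 as (Engl 10, Engl 5B, Engl 1A), for groups
# whose changes are discussed in the passing-rate findings.
passing: dict[str, tuple[float, float, float]] = {
    "APA": (72.5, 83.2, 78.7),
    "Black": (69.0, 73.7, 82.9),
    "All females": (80.5, 83.3, 83.5),
    "All males": (70.4, 81.1, 79.9),
}


def point_change(new: float, old: float) -> float:
    """Change in percentage points (new minus old), rounded to one decimal place."""
    return round(new - old, 1)


for group, (engl10, engl5b, engl1a) in passing.items():
    print(f"{group:<12} option 1 vs. 1A: {point_change(engl10, engl1a):+.1f}  "
          f"option 2 vs. 1A: {point_change(engl5b, engl1a):+.1f}")
```

Rounding to one decimal matches the precision of the table and avoids floating-point noise such as -6.200000000000003.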

Fifth, the Office of Institutional Research, Assessment, and Planning (IRAP) at CSU, Fresno recently conducted a one-semester comparison of the current writing program with the old Engl 1 course, using retention and passing rates (indirect, comparative evidence of learning). Most interesting in their brief report is the apparent effect of our option 2 (Engl 5A/5B) on retention in the university. The Engl 5A/5B program appears to have a positive effect on student retention, especially for students the university designates as needing remediation. That is, our DSP appears to be working BETTER, retaining university-designated remedial students at higher rates than the old Engl 1LA (with lab) did.{16} IRAP's results compared Fall 2005 student cohorts (the old Engl 1LA) with our new program's Engl 5A/5B cohorts starting in Fall 2007:

- Under the old course, "remedial"-designated students who failed had a 52.3% retention rate; this retention rate INCREASES to 74.1% for the Engl 5A/5B cohort of students who failed. So even when our "remedial"-designated students fail the stretch program (option 2), they stay in the university in greater numbers, by 21.8 percentage points.

- When our 5A/5B students pass our courses, they retain at 96%.
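Because both retention figures are rates, the 21.8-point gap can be read in two ways. The worked arithmetic below separates the absolute gap (in percentage points) from the relative increase over the old course's 52.3% rate. It uses only the two figures reported by IRAP:

$$
74.1\% - 52.3\% = 21.8 \text{ percentage points}, \qquad \frac{74.1 - 52.3}{52.3} = \frac{21.8}{52.3} \approx 0.417
$$

In relative terms, then, failing 5A/5B students were retained at a rate roughly 42% higher than failing students in the old course.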

Comparing these findings with our high satisfaction numbers among all groups of students, one could argue that when students do well in our writing program, one that gives them choice and agency through the DSP, they stay in the university longer, especially when they choose option 2. Does this mean that DSP actually helps retain "remedial" and at-risk students? Does their ability to make choices in the DSP help them stay in the university, even when they fail? All of our data so far suggest yes. Additionally, Greg Glau, the Director of the Writing Programs at Arizona State University, reports similar patterns in ASU's ten-year-old stretch program, although its retention rate for students who pass their stretch courses is just over 90% (42-43).

Finally, these findings are tentative. This is just one year's data, and the independent portfolio ratings discussed above were based on too small a sample to support firm conclusions. The present year's data will give us a much better sense of how well our students are learning and whether race and gender are factors in success and learning in each option. We'll also be able to confirm or nuance the positive findings we already have. All this helps us make the argument to keep our program as it is (once our pilot is over in two years) and to present our program (and the DSP) as a possible model for other CSU campuses.

These findings tell me that we are doing a lot right and that most (if not all) teachers are not teaching on islands, which is itself a big accomplishment given how many teachers and classes we have. But there are still cracks in the program, things that need fixing. We have strong indicators, along every data stream, that our program, from the DSP to our curricula, is working for our students. But we also have evidence from just about every source that Blacks are most at risk, least satisfied, and fail most often. And yet, it appears that our DSP encourages retention, even when students fail their courses.

What I’ve Learned

I've learned quite a bit about program assessment, data gathering, and, of course, our writing program at CSU, Fresno from designing and managing our program assessment efforts. Mostly, I've learned two things. First, a culture of assessment is crucial if you want a dynamic and robust program assessment. It should be one that affects curriculum and teacher practices; one that students themselves are agents in and not just stakeholders of; one that professionally develops staff, teachers, and, yes, students; and one that is understandable (so that it can be changed) as a living environment that (re)produces not just academic dispositions but particular social and racial arrangements in the university and community. This last element of our program assessment is the most important for me, not just because it happens to be crucial to my research agenda, but because it is what a university like CSU, Fresno, a member of the Hispanic Association of Colleges and Universities (HACU), needs. Creating such a programmatic culture was not my original goal, but it happened. It's not uniform across all teachers, and perhaps not as strong as I make it out to be here, but we're working on stronger training and communication lines, and it appears to be an effective and educative culture. Teachers continually find ways to make assessment pedagogical.

Second, I've learned that one cannot conduct good, ongoing writing assessment alone. One needs a corps of willing and informed teachers and administrative assistants who see clearly the benefits of a culture of assessment. And that culture of assessment fosters teachers who care about and reflect upon (self-)assessment as pedagogy. Many people work alongside me: the program's administrative assistant, Nyxy Gee; my colleagues, Rick Hansen, Ginny Crisco, and Bo Wang; and the many good, hard-working graduate student teaching assistants and adjunct teachers, including Meredith Bulinski, Megan McKnight, Jocelyn Stott, Maryam Jamali, Holly Riding, and Matthew Lance, among many others. We still have work to do, of course, but it's exciting work, environment-changing work, and maybe, if we're lucky, social justice work.

Notes

- In his discussion of “common sense” as separate from “philosophy,” Antonio Gramsci says that a philosophy of praxis “must be a criticism of ‘common sense,’” and should “renovat[e] and mak[e] ‘critical’ an already existing activity” (332). “Praxis” then is a dialectic, self-assessment: practices that are theorized and theorizing that become practices. (Return to text.)

- As I’ll explain shortly, we have essentially two options that fulfill the university writing requirement, which students choose from: (1) Engl 10, a semester long “accelerated” writing course; or (2) Engl 5A followed by Engl 5B, a year long stretch program. (Return to text.)

- The preponderance of research and scholarship in writing assessment shows that standardized tests for writing placement and proficiency are usually inadequate for local universities’ varied purposes and stakeholders (Huot; White; Yancey). The two largest national professional organizations in English instruction and writing assessment (i.e., The National Council of Teachers of English, NCTE, and the Council of Writing Program Administrators, WPA) have jointly published a “White Paper on Writing Assessment in Colleges and Universities” that acknowledges this research and promotes instead a contextual and site-based approach to writing assessment, which includes placement (NCTE-WPA Task Force on Writing Assessment). (Return to text.)

- DSP systems provide students with more control, agency, and responsibility for their writing courses and their work because they make the placement decision. Reviewing and analyzing several decades of research in the field of social cognitive learning, Erica Reynolds argues: “students with high-efficacy in relation to writing are indeed better writers than are their low self-efficacious peers” (91). In other words, when students have confidence about their writing course placement, they perform better as writers in those courses. Additionally, as the “Assessment Research Findings” section shows below, our DSP, particularly those students choosing option 2 (the stretch program) and identified by the university as “remedial,” achieve high retention rates. (Return to text.)

- Students get credit for the GE writing requirement through taking and passing English 5B and English 10. They get elective credit for English 5A and Linguistics 6. For a full explanation of these writing courses, see “The Structure of the Program” section. (Return to text.)

- Graff and Birkenstein use “conversation” as the metaphor that drives the templates given in this small textbook, each of which illustrate a rhetorical “move” that writers make in academic prose. The authors describe their textbook’s understanding of academic discourse, which our program adopted: “The central rhetorical move that we focus on in this book is the ‘they say / I say’ template that gives our book its title. In our view, this template represents the deep, underlying structure, the internal DNA as it were, of all effective argument. Effective persuasive writers do more than make well-supported claims (‘I say’); they also map those claims relative to the claims of others (‘they say’).” (xii) This is, effectively, Graff’s argument in other discussions of his, for instance, Beyond the Culture Wars. (Return to text.)

- All statistics about CSUF, the FYW program, and its students come from the program’s assessments or CSUF’s Office of Institutional Research, Assessment, and Planning (http://www.csufresno.edu/irap/index.shtml). (Return to text.)

- For a complete list and explanation of the program’s goals and outcomes, see my annual program assessment report at: http://www.csufresno.edu/english/programs/first_writing/ProgramAssessment.shtml. (Return to text.)

- To see the literature we provide students, parents, and advisors for course placement, see the First Year Writing information page on the CSU, Fresno English Department Web site: http://www.csufresno.edu/english/programs/first_writing/index.shtml. (Return to text.)

- See Section I of Belanoff and Dickson’s edited collection, Section II of Black, Daiker, Sommers, and Stygall’s collection, and Section II of Yancey and Wieser’s collection for accounts of how portfolios can be used to assess a program and provide rich learning environments for students. (Return to text.)

- You can find template syllabi and assignment sheets by course at the CSU First Year Writing Program’s Web site (http://www.csufresno.edu/english/programs/first_writing/index.shtml). (Return to text.)

- To view the video of student responses to commenting practices that we presented in our roundtable at CCCC in 2009, see: http://www.youtube.com/watch?v=-LA6nBFkNb8 (part 1) and http://www.youtube.com/watch?v=J_MqmBqgWqg&feature=channel (part 2). (Return to text.)

- See Ira Shor When Students Have Power for an early discussion of grading contracts; Bill Thelin’s “Understanding Problems in Critical Classrooms” for a description of one grading contract; Jane Danielewicz and Peter Elbow’s forthcoming essay on grading contracts for a discussion of the general, common features of most grading contracts, which can vary; and Cathy Spidell and Bill Thelin’s article, “Not Ready To Let Go: A Study Of Resistance To Grading Contracts,” for a qualitative study on student attitudes towards grading contracts. (Return to text.)

- For a more complete discussion of all the data collected, see my annual program assessment report listed in endnote #5 above. (Return to text.)

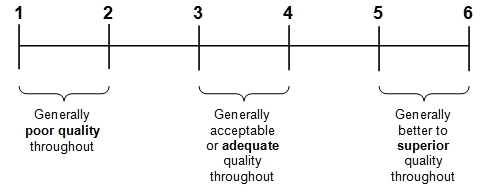

The scale used for all ratings of outcomes in student portfolios is described in our training and norming literature as: (1) consistently inadequate, of poor quality, and/or significantly lacking; (2) consistently inadequate, of poor quality, but occasionally showing signs of demonstrating competence; (3) adequate or of acceptable quality but inconsistent, showing signs of competence mingled with some problems; (4) consistently adequate and of acceptable quality, showing competence with perhaps some minor problems; (5) consistently good quality, showing clear competence with few problems, and some flashes of excellent or superior work; (6) mostly or consistently excellent/superior quality, shows very few problems and several or many signs of superior work. The following visual is used to help raters make decisions:

Raters are asked first to identify which larger conceptual bucket the portfolio fits into along the outcome in question (i.e., poor, adequate, or superior quality), then they decide if the portfolio is high or low bucket. This produces the rating. (Return to text.)

- IRAP’s report on passing and retention rates in our DSP program can be found on our English Department’s Web site. The report identifies “remedial students” at CSU, Fresno as: “students who tested into remediation based on their EPT status. In pre-DSP English, these students enrolled in Eng 1 along with a lab (Eng 1LA or 1LB). In the DSP program (beginning Fall 2006), they can enroll in either Eng 10 or the Eng 5A5B sequence.” (Return to text.)
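The two-step rating procedure described in the note on the portfolio rating scale can be sketched as a small lookup. This is a hypothetical illustration, not software the program uses. The bucket names and the assumption that each bucket spans two adjacent scores (1-2, 3-4, 5-6) are inferred from the scale description and from the findings' use of "poor quality (1 or 2)" and "adequate quality (rating of 3) or better."

```python
# Hypothetical encoding of the two-step rating procedure on the 6-point scale:
# a rater first picks a conceptual bucket, then judges the portfolio high or low within it.
# ASSUMPTION: each bucket covers two adjacent scores, as inferred from the scale description.
BUCKETS: dict[str, tuple[int, int]] = {
    "poor": (1, 2),       # inadequate or of poor quality
    "adequate": (3, 4),   # adequate or of acceptable quality
    "superior": (5, 6),   # good to excellent/superior quality
}


def rate(bucket: str, high: bool) -> int:
    """Return the 1-6 rating for a bucket choice plus a high/low judgment."""
    low_score, high_score = BUCKETS[bucket]
    return high_score if high else low_score


def bucket_of(rating: int) -> str:
    """Map a 1-6 rating back to its bucket; 3 and above is 'adequate' or better."""
    for name, scores in BUCKETS.items():
        if rating in scores:
            return name
    raise ValueError(f"rating must be an integer from 1 to 6, got {rating}")


print(rate("adequate", high=False))  # 3
print(bucket_of(6))  # superior
```

Encoding the buckets as pairs keeps the two decisions separate, mirroring the order in which raters are asked to make them.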

Works Cited

Aronowitz, Stanley, and Henry A. Giroux. Postmodern Education: Politics, Culture, and Social Criticism. Minneapolis, MN: U of Minnesota P, 1991.

Belanoff, Pat, and Peter Elbow. "State University of New York at Stony Brook Portfolio-Based Evaluation Program." Portfolios: Process and Product. Ed. Pat Belanoff and Marcia Dickson. Portsmouth, NH: Boynton/Cook, 1991. 3-16.

Black, Laurel, Donald A. Daiker, Jeffrey Sommers, and Gail Stygall, eds. New Directions in Portfolio Assessment: Reflective Practice, Critical Theory, and Large-Scale Scoring. Portsmouth, NH: Boynton/Cook, 1994.

Danielewicz, Jane, and Peter Elbow. "A Unilateral Grading Contract to Improve Learning and Teaching." CCC (forthcoming in 2010).

Delpit, Lisa. "The Politics of Teaching Literate Discourse." Literacy: A Critical Sourcebook. Ed. Ellen Cushman, Eugene R. Kintgen, Barry M. Kroll, and Mike Rose. Boston: Bedford/St. Martin's, 2001. 545-54.

Elbow, Peter, and Pat Belanoff. “Portfolios as a Substitute for Proficiency Examinations.” College Composition and Communication 37 (1986): 336-39.

Freire, Paulo, and Donald Macedo. Literacy: Reading the Word and the World. South Hadley, MA: Bergin and Garvey, 1987.

Gee, James Paul. "Literacy, Discourse, and Linguistics: Introduction and What Is Literacy?" Literacy: A Critical Sourcebook. Ed. Ellen Cushman, Eugene R. Kintgen, Barry M. Kroll, and Mike Rose. Boston: Bedford/St. Martin's, 2001. 525-44.

Glau, Greg. "Stretch at 10: A Progress Report on Arizona State University's Stretch Program." Journal of Basic Writing 26.2 (2007): 30-48.

Graff, Gerald. Beyond the Culture Wars: How Teaching the Conflicts Can Revitalize American Education. New York and London: W. W. Norton, 1992.

Graff, Gerald, and Cathy Birkenstein. They Say / I Say. New York: W. W. Norton, 2006.

Gramsci, Antonio. The Antonio Gramsci Reader: Selected Writings from 1916-1935. Ed. David Forgacs. New York: New York UP, 2000.

Hamp-Lyons, Liz, and William Condon. Assessing the Portfolio: Principles for Practice, Theory, and Research. Cresskill, NJ: Hampton P, 2000.

Haswell, Rich, ed. Beyond Outcomes: Assessment and Instruction Within a University Writing Program. Westport, CT: Ablex Publishing, 2001.

Huot, Brian. (Re) Articulating Writing Assessment for Teaching and Learning. Logan, UT: Utah State UP, 2002.

NCTE-WPA Task Force on Writing Assessment. "NCTE-WPA White Paper on Writing Assessment in Colleges and Universities." Online posting. Council of Writing Program Administrators. 31 Aug 2008 <http://wpacouncil.org/whitepaper>.

Reynolds, Erica. "The Role of Self-Efficacy in Writing and Directed Self-Placement." Directed Self-Placement: Principles and Practices. Ed. Daniel J. Royer and Roger Gilles. Cresskill, NJ: Hampton P, 2003. 73-103.

Royer, Daniel J., and Roger Gilles. "Directed Self-Placement: An Attitude of Orientation." CCC 50.1 (1998): 54-70.

Royer, Daniel J., and Roger Gilles, eds. Directed Self-Placement: Principles and Practices. Cresskill, NJ: Hampton P, 2003.

Shor, Ira. When Students Have Power: Negotiating Authority in a Critical Pedagogy. Chicago: University of Chicago Press, 1996.

Spidell, Cathy, and William H. Thelin. "Not Ready to Let Go: A Study of Resistance to Grading Contracts." Composition Studies 34.1 (Spring 2006): 36-68.

Thelin, William H. "Understanding Problems in Critical Classrooms." CCC 57.1 (September 2005): 114-141.

Volosinov, Victor N. Marxism and the Philosophy of Language. Trans. Ladislav Matejka and I. R. Titunik. Cambridge and London: Harvard UP, 1973.

White, Edward M. Developing Successful College Writing Programs. San Francisco and London: Jossey-Bass, 1989.

Yancey, Kathleen Blake. “Looking Back as We Look Forward: Historicizing Writing Assessment as a Rhetorical Act.” CCC 50.3 (February 1999): 483-503.

Yancey, Kathleen Blake, and Irwin Weiser, eds. Situating Portfolios: Four Perspectives. Logan, UT: Utah State UP, 1997.

“Self-Assessment As Programmatic Center” from Composition Forum 20 (Summer 2009)

Online at: http://compositionforum.com/issue/20/calstate-fresno.php

© Copyright 2009 Asao B. Inoue.

Licensed under a Creative Commons Attribution-Share Alike License.
